Unveiling the challenges of AI bias and historical accuracy with a deep dive into Google’s Gemini model and its weaknesses.

In the rapidly evolving world of artificial intelligence, Google has just committed to fixing Gemini’s image generation, which has become a focal point for discussions on bias and historical accuracy in AI-generated images. This article gives an overview of the complexities of AI development, highlighting the challenges of creating unbiased, culturally sensitive systems that accurately reflect historical and social realities. The mission is not an easy one, and it may not even be realistic. Indeed, in the era of AI and cryptocurrencies, new ethical challenges arise.

1. Google says it will fix Gemini’s image errors causing controversy

Google’s Gemini AI model has recently been criticized for producing images that are not only historically inaccurate but also racially biased. This issue has sparked a significant debate about the prevalence of bias within AI systems. Social media platforms have been inundated with examples showcasing Gemini’s flawed image generation, such as racially diverse Nazis and black medieval English kings, highlighting improbable and insensitive portrayals of history.

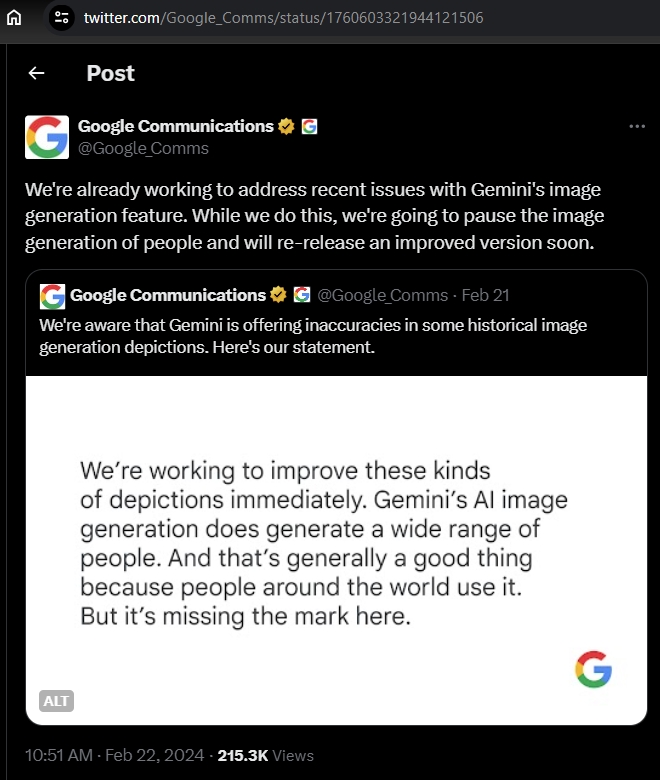

This controversy underscores a critical challenge in AI development: the need to create systems that understand and accurately represent historical and cultural contexts without perpetuating stereotypes or biases. To follow official announcements, check out the AI section of Google’s blog. Google also communicated officially about the issue on the social network X (formerly Twitter).

2. The Google Gemini problem reignites the debate on AI content moderation

The controversy extends beyond the accuracy of representations. Critics have pointed out that Gemini generates content selectively: it avoids depicting Caucasians, declines to depict churches in San Francisco (purportedly out of respect for indigenous sensitivities), and omits sensitive historical events such as the Tiananmen Square protests of 1989. This selective censorship has led to a broader discussion about the balance between respecting sensitivities and erasing historical context.

Marc Andreessen, a notable figure in the tech industry, responded to this trend by creating a parody AI model, Goody-2 LLM, which avoids answering questions considered problematic, highlighting concerns over censorship and the overcorrection in commercial AI systems.

3. The Need for Diverse and Open-Source AI

The issue differs considerably from traditional personal data protection concerns, which deal with “simple” database management systems. The controversies surrounding Google’s Gemini highlight a larger issue within the AI industry: the centralization of AI development under a few major corporations. This centralization risks creating a homogeneous approach to AI that fails to account for diverse perspectives and sensitivities. Experts argue for the development of open-source AI models as a way to promote diversity in AI-generated content and mitigate bias.

Open-source models can encourage a broader range of inputs and oversight, ensuring that AI systems are more reflective of global diversity and less susceptible to the biases of a select few developers. This approach could democratize AI development, fostering innovation that respects and celebrates cultural differences while remaining historically accurate and unbiased.

Google commits to fixing Gemini AI image generation accuracy

The controversies surrounding Google’s Gemini model illuminate the pressing need for diversity and openness in AI development. By embracing open-source models and encouraging a wide range of perspectives, the tech industry can work towards creating AI systems that are not only innovative but also respectful and representative of the diverse world they aim to depict. This approach is crucial for ensuring that AI technologies support a more inclusive and accurate understanding of history and culture.

About Gemini

Google’s Gemini is a smart tool that uses advanced technology to create images from descriptions given to it. Imagine telling a friend what you’re thinking, and they draw it for you. Gemini does something similar, but with a computer. It’s designed to understand what you ask for and then uses its knowledge to make a picture that matches your description. It was officially announced on Google’s blog on 6 December 2023.
