Aggregate and sum data with SSIS to perform the equivalent of GROUP BY in SQL using the Aggregation Transformation.

In an ETL project with SSIS, it is common to aggregate data with SSIS, from a SQL Server table to a higher level, such as data marts. That is, in practice, to calculate the sum of amounts for a given business level. To do this, there are two possible solutions, write a classic SQL query of type Group By or use the SSIS Aggregate transformation.

This tutorial explains step by step how to use the SSIS component and aggregate data without writing an SQL query, and shows also an equivalent example of MS SQL Group By.

Table of Contents

1. Prepare tables and data to aggregate using SSMS

The goal of this tutorial is to read and then aggregate data from the sales table level, i.e., dbo.Sales from this columns:

- Customer

- Month

- Year

- Product

To a higher level and store it in the aggregated sales table, called dbo.Sales_Grouped, i.e., only two columns:

- Customer

- Year

1.1 Create the sales table with the SQL script

Connect to SSMS and run this script to create the source table.

CREATE TABLE [dbo].[Sales](

[CustomerID] [int] NOT NULL,

[MonthID] [nvarchar](20) NOT NULL,

[YearID] [nvarchar](20) NOT NULL,

[Qty] [numeric](10,2) NOT NULL,

[Price] [numeric](10,2) NOT NULL,

[Sales] [numeric](10,2) NOT NULL,

[ProductID] [int] NOT NULL,

[ProductName] [nvarchar](100) NOT NULL,

CONSTRAINT [SalesPK]

PRIMARY KEY CLUSTERED (

[CustomerID], [MonthID], [YearID], [ProductID] ASC

)

);

GO

Then insert the 44 sales rows at the fine level.

INSERT [dbo].[Sales] VALUES (1, N'1', N'2022', 10.00, 879.20, 8792.00, 31, N'Babka'); INSERT [dbo].[Sales] VALUES (1, N'3', N'2021', 5.00, 312.00, 1560.00, 40, N'Unreal'); INSERT [dbo].[Sales] VALUES (1, N'6', N'2021', 9.00, 627.00, 5643.00, 27, N'Qrunch'); INSERT [dbo].[Sales] VALUES (1, N'7', N'2022', 6.00, 621.70, 3730.20, 10, N'Ambrosi'); INSERT [dbo].[Sales] VALUES (1, N'7', N'2022', 4.00, 653.10, 2612.40, 47, N'Quickick'); INSERT [dbo].[Sales] VALUES (1, N'9', N'2021', 2.00, 734.90, 1469.80, 47, N'Quickick'); INSERT [dbo].[Sales] VALUES (2, N'2', N'2021', 9.00, 895.60, 8060.40, 47, N'Quickick'); INSERT [dbo].[Sales] VALUES (2, N'3', N'2022', 11.00, 51.80, 569.80, 49, N'Menudito'); INSERT [dbo].[Sales] VALUES (2, N'7', N'2021', 11.00, 112.20, 1234.20, 19, N'Chiavie'); INSERT [dbo].[Sales] VALUES (2, N'9', N'2022', 7.00, 581.80, 4072.60, 33, N'Jans'); INSERT [dbo].[Sales] VALUES (3, N'2', N'2022', 4.00, 576.60, 2306.40, 5, N'Amoy'); INSERT [dbo].[Sales] VALUES (3, N'6', N'2021', 6.00, 551.10, 3306.60, 8, N'Gilda'); INSERT [dbo].[Sales] VALUES (4, N'0', N'2021', 7.00, 15.40, 107.80, 26, N'Lasco'); INSERT [dbo].[Sales] VALUES (4, N'1', N'2022', 2.00, 706.60, 1413.20, 27, N'Qrunch'); INSERT [dbo].[Sales] VALUES (4, N'1', N'2022', 9.00, 491.70, 4425.30, 30, N'Paldo'); INSERT [dbo].[Sales] VALUES (4, N'3', N'2022', 4.00, 810.20, 3240.80, 22, N'Bourbon'); INSERT [dbo].[Sales] VALUES (4, N'4', N'2021', 9.00, 648.90, 5840.10, 42, N'Barbacoa'); INSERT [dbo].[Sales] VALUES (4, N'5', N'2021', 7.00, 284.90, 1994.30, 5, N'Amoy'); INSERT [dbo].[Sales] VALUES (5, N'11', N'2021', 3.00, 914.90, 2744.70, 45, N'Predue'); INSERT [dbo].[Sales] VALUES (5, N'11', N'2022', 9.00, 903.80, 8134.20, 44, N'Kemps'); INSERT [dbo].[Sales] VALUES (5, N'4', N'2022', 10.00, 133.90, 1339.00, 6, N'Haystack'); INSERT [dbo].[Sales] VALUES (5, N'6', N'2021', 10.00, 940.20, 9402.00, 34, N'Amazin'); INSERT [dbo].[Sales] VALUES (5, N'7', N'2021', 7.00, 352.90, 2470.30, 32, N'Maverik'); INSERT [dbo].[Sales] VALUES (5, N'7', N'2022', 3.00, 116.40, 349.20, 7, N'Kalamata'); INSERT [dbo].[Sales] VALUES (6, N'1', N'2021', 4.00, 283.30, 1133.20, 23, N'Oatsnack'); INSERT [dbo].[Sales] VALUES (6, N'4', N'2021', 4.00, 604.90, 2419.60, 10, N'Ambrosi'); INSERT [dbo].[Sales] VALUES (6, N'4', N'2022', 5.00, 184.30, 921.50, 6, N'Haystack'); INSERT [dbo].[Sales] VALUES (6, N'5', N'2022', 9.00, 464.40, 4179.60, 18, N'Lurpak'); INSERT [dbo].[Sales] VALUES (6, N'8', N'2021', 6.00, 836.10, 5016.60, 40, N'Unreal'); INSERT [dbo].[Sales] VALUES (6, N'9', N'2022', 4.00, 254.80, 1019.20, 1, N'Boncora'); INSERT [dbo].[Sales] VALUES (7, N'0', N'2021', 7.00, 100.90, 706.30, 45, N'Predue'); INSERT [dbo].[Sales] VALUES (7, N'10', N'2021', 4.00, 161.50, 646.00, 10, N'Ambrosi'); INSERT [dbo].[Sales] VALUES (7, N'10', N'2022', 7.00, 484.50, 3391.50, 50, N'Armanino'); INSERT [dbo].[Sales] VALUES (7, N'3', N'2022', 7.00, 748.80, 5241.60, 38, N'Exo'); INSERT [dbo].[Sales] VALUES (7, N'6', N'2021', 1.00, 623.50, 623.50, 45, N'Predue'); INSERT [dbo].[Sales] VALUES (7, N'7', N'2021', 6.00, 185.00, 1110.00, 28, N'Basilico'); INSERT [dbo].[Sales] VALUES (7, N'8', N'2022', 7.00, 625.50, 4378.50, 4, N'Sartori'); INSERT [dbo].[Sales] VALUES (7, N'8', N'2022', 3.00, 125.40, 376.20, 38, N'Exo'); INSERT [dbo].[Sales] VALUES (8, N'1', N'2021', 8.00, 949.80, 7598.40, 16, N'Homekist'); INSERT [dbo].[Sales] VALUES (8, N'10', N'2021', 9.00, 944.30, 8498.70, 41, N'Baked'); INSERT [dbo].[Sales] VALUES (8, N'10', N'2022', 7.00, 578.10, 4046.70, 2, N'Master'); INSERT [dbo].[Sales] VALUES (8, N'12', N'2022', 3.00, 309.20, 927.60, 42, N'Barbacoa'); INSERT [dbo].[Sales] VALUES (8, N'2', N'2022', 4.00, 417.80, 1671.20, 25, N'Scrapple'); INSERT [dbo].[Sales] VALUES (8, N'4', N'2021', 7.00, 509.20, 3564.40, 4, N'Sartori');

1.2 Create the grouped sales table with the SQL script

Then create the table of grouped sales by year and customer. This table will be loaded by the SSIS package.

CREATE TABLE [dbo].[Sales_Grouped](

[CustomerID] [int] NOT NULL,

[YearID] [nvarchar](20) NOT NULL,

[Sales] [numeric](10,2) NOT NULL,

CONSTRAINT [SalesGroupedPK]

PRIMARY KEY CLUSTERED (

[CustomerID], [YearID] ASC

)

);

GO

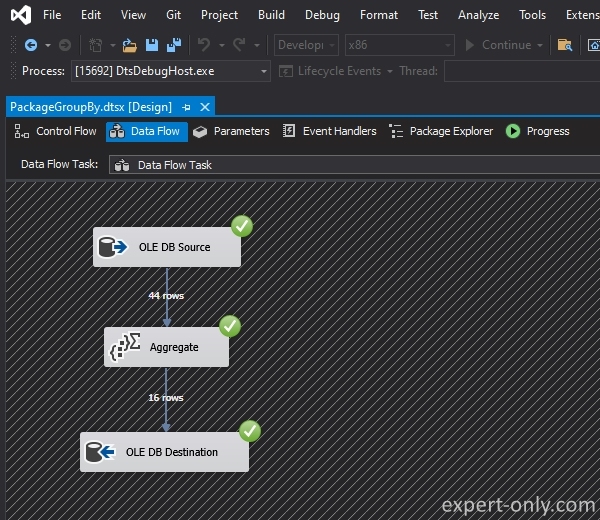

2. Create the data flow to aggregate data with SSIS

Now configure the data flow with the necessary SSIS components. The prerequisite is to have already 2 elements available in Visual Studio:

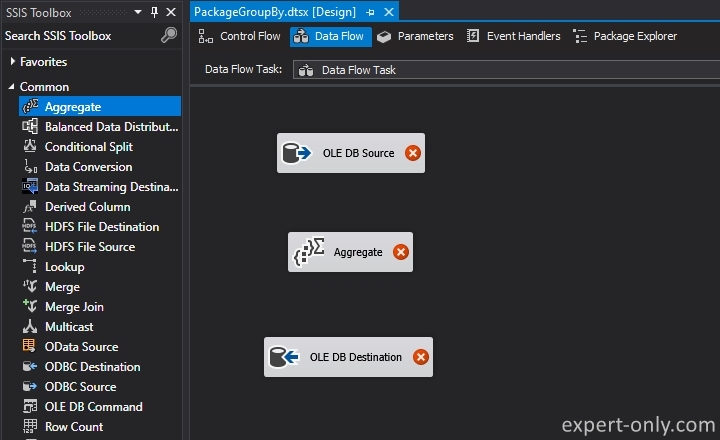

2.1 Add three Integration Services components to the data flow

In the SSIS package, add a data flow. Then add the three following components:

- An OLE DB source

- The SSIS Aggregate transformation

- An OLE DB Destination component

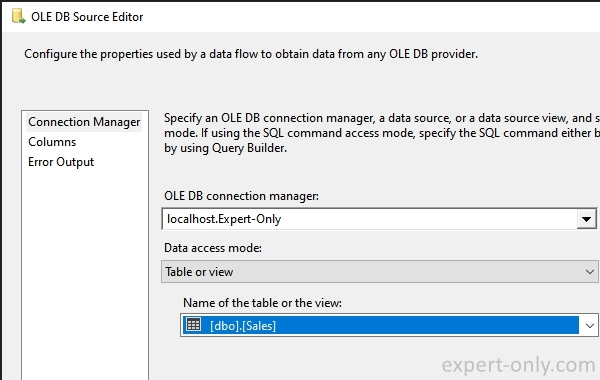

2.2 Configure the Source Table with SSIS

Open the OLE DB Source component and select the sales table created and populated in step 1.1.

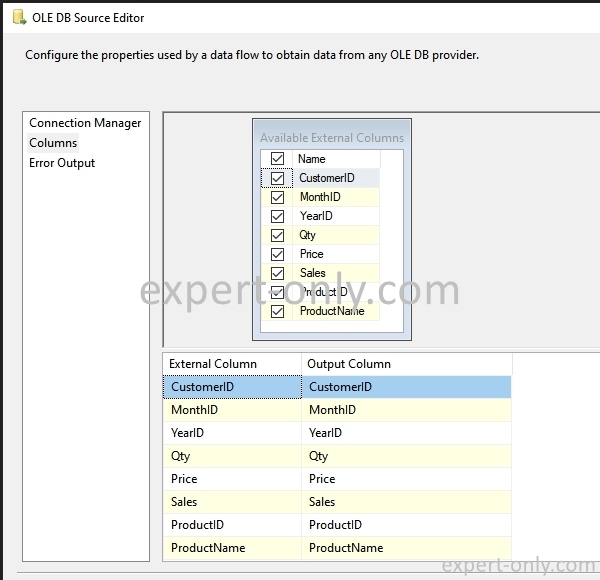

From the Columns tab, check that the columns of the table are mapped correctly.

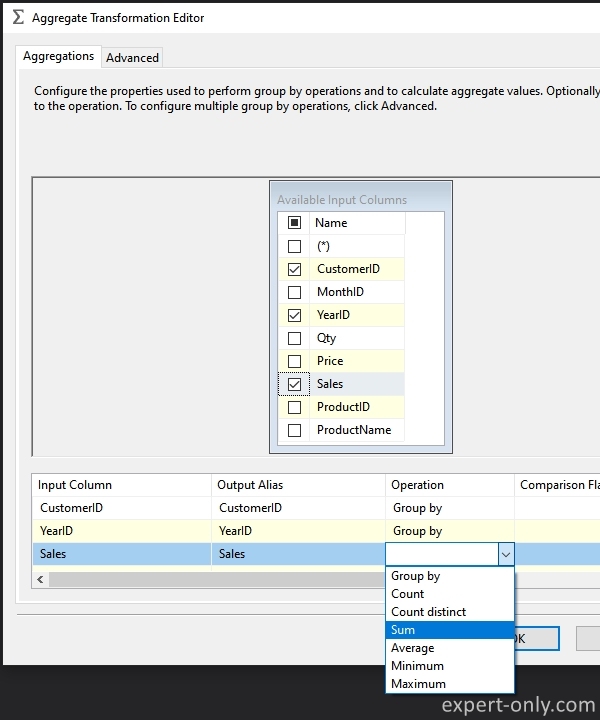

2.3 Set up the GROUP BY in the SSIS aggregation transformation

Link the OLE DB Source component to the Aggregate SSIS component. Now open the transformation and select the following columns:

- CustomerID : with a Group By operation.

- YearID : also with a Group By operation.

- Sales : with a Sum operation to calculate the sum of the sales output of the component.

The SSIS component detects data types and by default only offers aggregation operations for numeric columns.

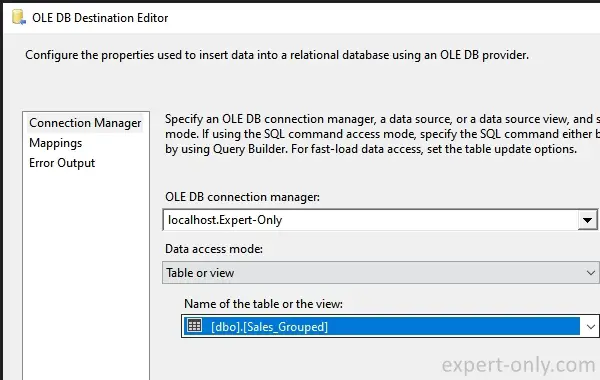

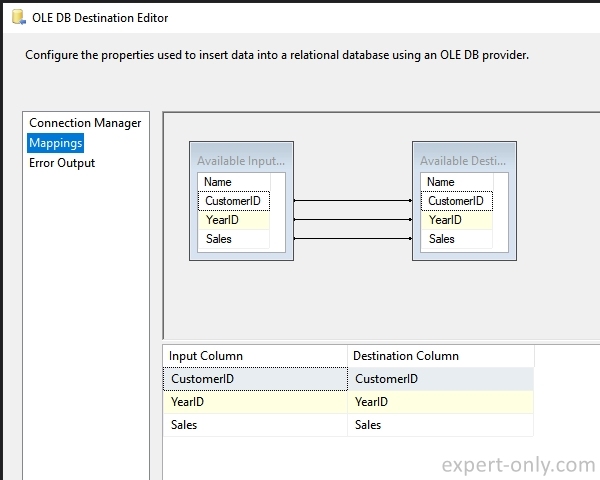

2.4 Configure the aggregated target table with SSIS

Now link the Aggregate transformation component with the target table. Choose the dbo.Sales_Grouped table.

Also check the mapping from the Mappings tab.

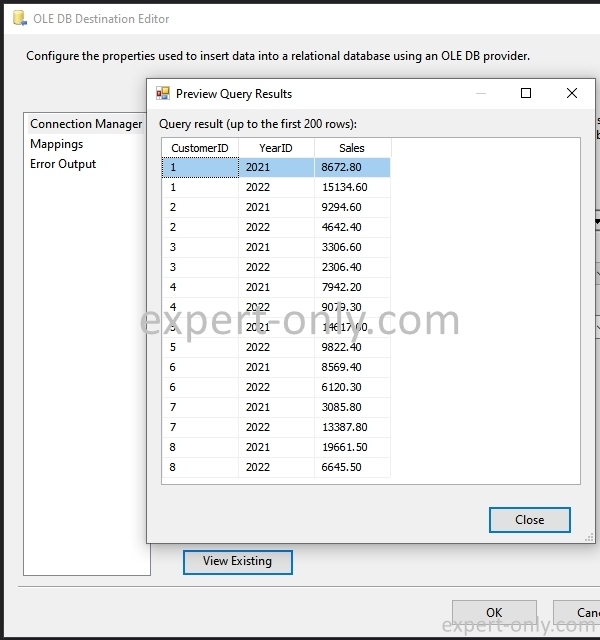

3. Run the SSIS package to aggregate and check data

Finally, run the SSIS package to aggregate the sales data to an annual level and store it in the second table. The package reads 44 source rows and aggregates the table data into 16 rows.

To check the data, two options, the first is to use the SSIS data viewer directly from the OLE DB Destination component, like this:

4. SQL GROUP BY equivalent to the data Aggregation with SSIS

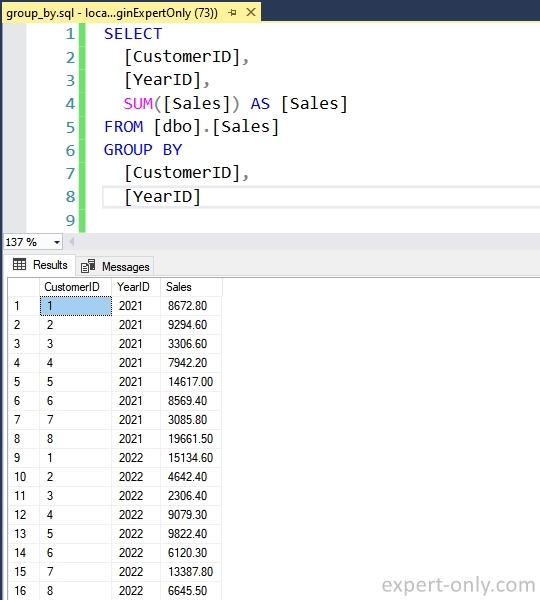

The second option is to execute a SQL query directly from SSMS to check that the data in the table is correct. Execute this SQL query with the GROUP BY function which is the equivalent of the SSIS package operations.

SELECT [CustomerID], [YearID], SUM([Sales]) AS [Sales] FROM [dbo].[Sales] GROUP BY [CustomerID], [YearID];

5. Conclusion on the SSIS Aggregate transformation

This SSIS tutorial explains step by step how to aggregate data and calculate a sum with a GROUP BY on a table without writing a SQL query. The component is convenient for simple queries, in more complex cases it is better to write a SQL query in the OLE DB Source component. In order to keep packages simple and easy to maintain.

Be the first to comment